It’s challenging to measure education and training. After conducting a training session, trainers must determine its exact effectiveness.

How much did the participants learn? Can they use their new learnings in their job? What knowledge gaps do they have?

A training evaluation model helps to answer these questions. It is a systematic approach to measuring the effectiveness of training interventions.

This article starts by defining what a training evaluation model is and why it is an important part of effective training. We’ll then look at five important models – describing how they work, their advantages, and their limitations.

What is a training evaluation model?

A training evaluation model provides a structured approach to assess the effectiveness of a training program.

The aim is to determine the extent to which participants acquire the intended knowledge, skills, attitudes, and behaviors during training.

This can help stakeholders make decisions about improving or revising the training, as well as allocating resources.

In a business context, evaluation typically follows employee onboarding, compliance training, leadership development, or other continuing professional development.

What are the five fundamental training evaluation models?

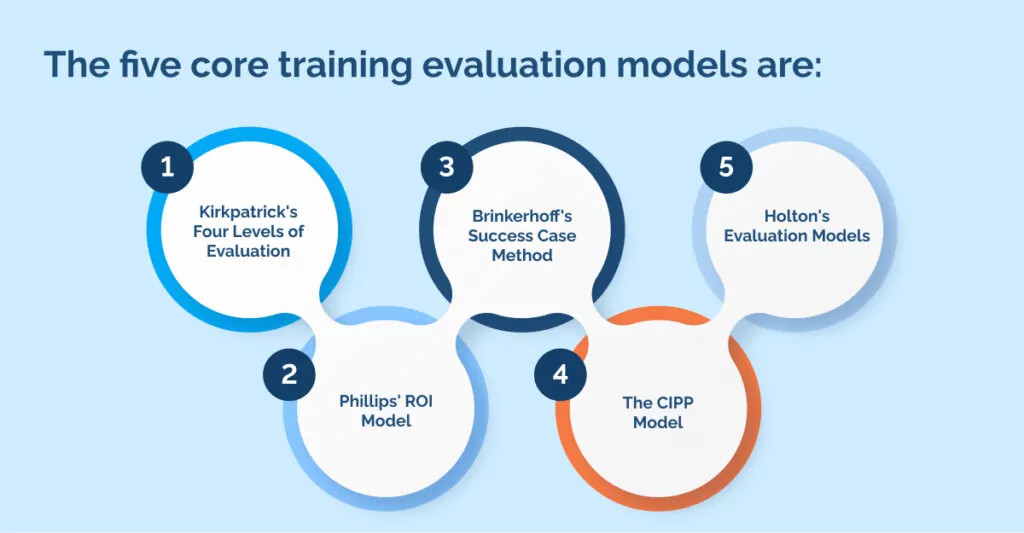

The five core training evaluation models are:

- Kirkpatrick’s Four Levels of Evaluation

- Phillips’ ROI Model

- Brinkerhoff’s Success Case Method

- The CIPP Model

- Holton’s Evaluation Model

These models are proven approaches to training evaluation. They have been thoroughly researched, they are highly adaptable, and they should remain useful as business training trends evolve.

Kirkpatrick’s Four Levels of Evaluation

This widely recognized model is a cornerstone in the field of training evaluation and offers a structured framework for organizations to measure training impact.

By examining the four distinct levels of evaluation, organizations gain insights into reactions, learning, behavior, and results, ultimately helping them make data-driven decisions for improving training initiatives.

How it works

Kirkpatrick’s evaluation model assesses training effectiveness on four distinct levels:

- Reaction

The first level measures participants’ immediate reactions to the training, gauging their satisfaction and perceived relevance. This is often done through surveys and feedback forms.

- Learning

The second level evaluates the knowledge and skills acquired during training. It involves pre- and post-training assessments to quantify the extent of learning.

- Behavior

Level three delves into the application of newly acquired knowledge and skills in the workplace. It assesses the changes in participants’ behavior as a result of training.

- Results

The fourth level focuses on the impact of training on organizational outcomes, such as improved productivity, reduced costs, or increased revenue. It looks at whether the training has met its intended objectives.
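To make the Level 2 idea of pre- and post-training assessments concrete, here is a minimal Python sketch. It assumes each participant has one score before and one after training. The function name `learning_gain` and the sample scores are hypothetical, and a simple average score change is only one of several ways to quantify learning.

```python
from statistics import mean


def learning_gain(pre_scores, post_scores):
    """Return the average per-participant score change (post minus pre)."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre and post scores must cover the same participants")
    if not pre_scores:
        raise ValueError("at least one participant is required")
    # Pair each participant's pre score with their post score
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


# Hypothetical test scores (0-100) for four participants
pre = [50, 60, 70, 40]
post = [70, 75, 80, 65]
print(learning_gain(pre, post))  # 17.5
```

A positive value suggests that scores rose after training. On its own it does not show that the training caused the change.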

Advantages of Kirkpatrick’s Four Levels

This model offers a holistic view of training effectiveness, from initial reactions to the ultimate impact on an organization’s success.

It provides a structured framework for evaluating training, making it easier to collect and analyze data.

And fundamentally, Kirkpatrick’s model supports alignment with organizational goals, linking training programs to the organization’s strategic objectives.

Limitations of Kirkpatrick’s Four Levels

However, there are also some downsides to Kirkpatrick’s model.

Gathering data for all four levels can be time-consuming and resource-intensive. Attributing organizational results solely to training can be complex, as various factors may contribute to outcomes.

The model tends to prioritize quantitative data over qualitative insights, potentially missing valuable information about the training’s impact.

Phillips’ ROI Model

For organizations seeking to quantify the financial outcomes of their training, Phillips’ Return on Investment (ROI) Model is a vital tool.

It is an excellent way to link training directly to the bottom line. By evaluating participants’ reactions, learning outcomes, application, and ultimately, the financial returns, this model offers a comprehensive perspective on training impact, making it a valuable choice for stakeholders looking for a clear ROI.

How it works

Phillips’ Return on Investment (ROI) Model is a comprehensive approach to evaluating training programs with a focus on financial outcomes. It involves a five-level process:

- At Level 1, reactions and planned actions are assessed through participant feedback, measuring initial impressions and intentions to apply the training.

- Level 2 evaluates learning outcomes by comparing the knowledge and skills of participants before and after training through assessments and tests.

- Level 3 delves into application and implementation, monitoring how well participants apply what they’ve learned in their work roles, often involving observations and self-assessments.

- At Level 4, the model scrutinizes business impact. It measures progress against specific objectives such as increased sales, reduced costs, or improved customer satisfaction, and collects data to quantify the results.

- Finally, Level 5 calculates the ROI by comparing the monetary benefits of training to its total costs.
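The Level 5 comparison of monetary benefits to total costs can be sketched in a few lines of Python. This example uses the commonly cited formulation, in which ROI is net benefits divided by total costs, expressed as a percentage. The function names and the figures are hypothetical, and the sketch assumes the benefits have already been converted to money and attributed to the training.

```python
def benefit_cost_ratio(benefits, costs):
    """Return monetary benefits divided by total training costs."""
    if costs <= 0:
        raise ValueError("total costs must be positive")
    return benefits / costs


def roi_percent(benefits, costs):
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if costs <= 0:
        raise ValueError("total costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical figures: 150,000 in monetary benefits, 100,000 in total costs
print(benefit_cost_ratio(150_000, 100_000))  # 1.5
print(roi_percent(150_000, 100_000))         # 50.0
```

An ROI of 50% means that every unit of currency spent on training was recovered, plus an extra half unit in net benefit.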

Advantages of Phillips’ ROI Model

This model provides a financial focus, making it a valuable choice for organizations looking to quantify the monetary returns on training investments.

It aligns training with specific business objectives, simplifying the demonstration of its value to stakeholders.

By evaluating multiple levels, it offers a holistic view of the training process, encompassing reactions, learning, application, and financial returns.

Limitations of Phillips’ ROI Model

Collecting data on ROI can be resource-intensive and time-consuming, requiring access to financial and performance data.

The complexity of calculating ROI means it may not always yield precise results, as isolating training as the sole factor affecting business outcomes can be challenging.

This model predominantly emphasizes financial outcomes, potentially overlooking other valuable non-financial impacts of training programs.

Brinkerhoff’s Success Case Method

Evaluating training is not just about the good stuff that happened – the failures are important, too!

With this in mind, one of the outstanding features of the Brinkerhoff method is that it takes a close look at the successes and failures of every training program.

How it works

Brinkerhoff’s Success Case Method is a pragmatic and qualitative approach to training evaluation.

It provides information about successes and areas that need improvement. The method involves identifying individuals or groups who have notably succeeded or faced challenges after training.

Researchers conduct in-depth interviews, surveys, or assessments to gather data about their experiences. Successes and difficulties are then compared and analyzed to understand why the training worked for some and not for others.

This qualitative exploration helps uncover valuable insights about the training’s effectiveness and its real impact on participants.
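The first step, identifying who succeeded and who struggled, can be illustrated with a short Python sketch. It assumes a brief post-training survey has given each participant a single score for how well they applied the training. The function name `select_extreme_cases`, the scoring scale, and the participant data are all hypothetical. In practice, the selection criteria depend on the program's goals.

```python
def select_extreme_cases(scores, n=2):
    """Return (most_successful, least_successful) lists of participant names.

    scores maps each participant's name to a survey score, where a higher
    score means the participant reported more success applying the training.
    """
    if n < 1 or 2 * n > len(scores):
        raise ValueError("n must be at least 1 and keep the two groups separate")
    # Rank participants from highest to lowest score
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:n], ranked[-n:]


# Hypothetical survey scores on a 1-10 scale
survey = {"Ana": 9, "Ben": 3, "Chloe": 7, "Dev": 2, "Eli": 5}
successes, struggles = select_extreme_cases(survey, n=2)
print(successes)  # ['Ana', 'Chloe']
print(struggles)  # ['Ben', 'Dev']
```

The two groups would then be invited to the in-depth interviews described above.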

Advantages of Brinkerhoff’s Success Case Method

This method’s strength lies in its ability to capture nuanced, context-specific details that quantitative methods might miss.

The qualitative nature of the method makes it more accessible and cost-effective than some quantitative models. It’s a highly practical choice for organizations aiming to gather rich, real-world insights.

Limitations of Brinkerhoff’s Success Case Method

The method’s qualitative nature can result in findings that are more challenging to quantify and compare across different cases.

It relies heavily on the skills and objectivity of researchers, which can introduce subjectivity into the evaluation.

Additionally, it may not provide the same level of statistical rigor and generalizability as quantitative models, limiting its ability to draw broad, data-driven conclusions about training effectiveness.

The CIPP Model (Context, Input, Process, Product)

This holistic model provides a detailed and comprehensive view of training effectiveness. It grants organizations the flexibility to customize their evaluation processes to align with their unique needs, driving continuous improvement.

How it works

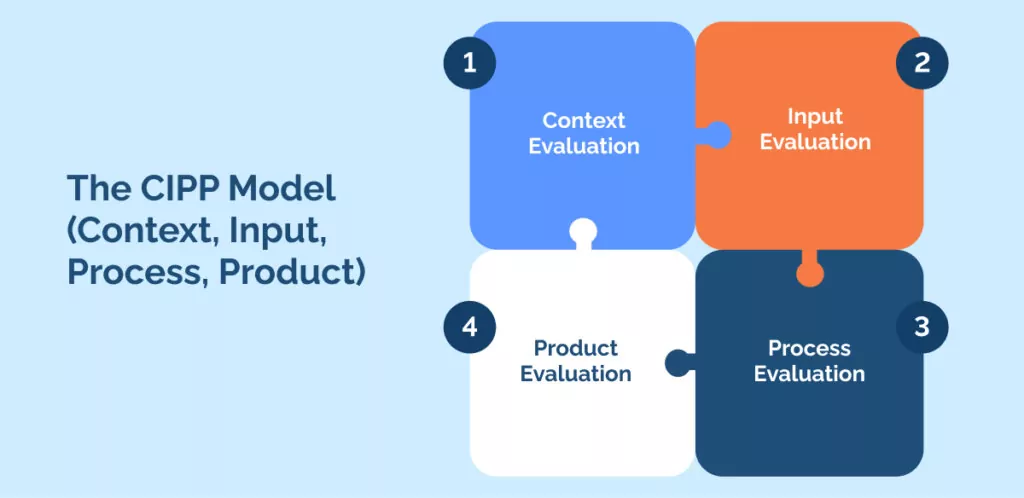

The CIPP Model, created by Daniel L. Stufflebeam, is a comprehensive evaluation framework that examines various aspects of a training program. It operates through four interrelated components:

- Context Evaluation

This phase assesses the contextual factors that influence the training program, such as the organizational environment and needs. It helps in identifying the broader setting in which the training is designed and delivered.

- Input Evaluation

Here, the model focuses on the resources and materials used in training, including curriculum, trainers, and learning materials. It assesses the adequacy and appropriateness of these inputs.

- Process Evaluation

The model examines the training process, assessing how well it is implemented and delivered, as well as whether it meets the objectives. It involves observations, surveys, and other methods to evaluate the quality of the training process.

- Product Evaluation

This phase assesses the outcomes of the training program, including the skills and knowledge acquired by participants. It measures the effectiveness of the training in achieving its goals.

Advantages of The CIPP Model

The CIPP Model offers a holistic and all-encompassing perspective of training programs, ensuring that all crucial aspects are considered.

Organizations have the flexibility to tailor the evaluation process to their unique needs, enabling them to focus on the areas most pertinent to their training objectives.

The iterative nature of the model promotes continuous improvement, as it identifies strengths and weaknesses throughout the various phases of the training program.

Limitations of The CIPP Model

While offering comprehensive insights, the CIPP Model can be resource-intensive, demanding considerable time, personnel, and financial resources to conduct an extensive evaluation.

Due to its comprehensive nature, the model may entail complex data analysis and reporting, which could be time-consuming.

Furthermore, some subjectivity may be involved in the interpretation of results, particularly in the context and input evaluation phases, potentially leading to varying conclusions.

Holton’s Evaluation Model

Holton’s model ensures a well-rounded perspective on training effectiveness.

It becomes a strategic choice for organizations aiming to harmonize their training with their overarching mission and reap tangible benefits within the workplace.

How it works

Holton’s Evaluation Model, developed by Elwood F. Holton III, is a comprehensive framework designed to evaluate training and its effectiveness. It is based on four key components:

- Training Needs Assessment

The process begins with identifying the specific needs and objectives of training. Organizations define what skills, knowledge, or competencies employees should acquire.

- Training Design and Delivery

The model emphasizes the importance of designing and delivering training programs that align with the identified needs. This phase involves selecting appropriate content, methods, and resources for effective training.

- Learning

The focus here is evaluating the extent to which participants acquire the intended knowledge and skills during the training. Pre- and post-assessments are commonly used to measure the learning outcomes.

- Transfer of Learning

Holton’s model pays particular attention to the application of learning in the workplace. It assesses how well participants utilize their newly acquired skills and knowledge in their actual job roles.

Advantages of Holton’s Evaluation Model

A significant advantage of Holton’s model is its holistic approach, covering the entire training cycle from needs assessment to the practical application of learning.

It underscores the importance of the transfer of learning to the workplace, ensuring that training leads to tangible, on-the-job benefits.

Moreover, the model’s emphasis on aligning training with organizational goals ensures that training investments directly support company objectives, making it a strategic choice for organizations seeking clear links between training and their broader mission.

Limitations of Holton’s Evaluation Model

Implementing Holton’s model can be resource-intensive, as it necessitates meticulous data collection, analysis, and ongoing assessments throughout the training process.

The comprehensive approach of the model may involve complex evaluation processes, which can be challenging for organizations with limited resources or expertise.

Furthermore, Holton’s model, while evaluating learning and transfer, may not focus as extensively on specific business outcomes as some other models, potentially leaving some financial and operational impacts unaddressed.

Choose your model wisely for strategic training success

Effective corporate training will be a cornerstone of the future of work. As new technology takes hold in the workplace, it’s essential that your staff have the right know-how.

For example, in 2023 KPMG reported that the lack of AI specialists was a particular pain point for adopting generative AI in business.

Training, therefore, will continue to have an important place in every business. And with training comes the need to evaluate training programs thoroughly and clearly.

Whatever your key performance indicators, one of the five core learning evaluation models in this article could help make a difference for your business.

It’s not easy to align your targets, training methods, and evaluation models. But if you’re among the 7 out of 10 L&D professionals who are feeling the pressure to demonstrate their impact, you’ll have to find a way to make it work!

There are, of course, other ways to ensure that you’re getting the full value out of your training programs.

For example, a digital adoption platform like WalkMe can deliver focused training interventions – and then monitor and evaluate the outcomes. The data it creates works with whatever learning model you’ve chosen to implement.

FAQs

How many different types of training evaluation models are there?

Even for simple situations, finding the right evaluation model is a tricky business.

There are many well-developed, established models that trainers can use. Although not an exhaustive list, examples include:

- Kirkpatrick’s Four Levels of Evaluation

- Phillips’ ROI Model

- Brinkerhoff’s Success Case Method

- CIRO (Context, Input, Reaction, Outcome) Model

- CIPP Model (Context, Input, Process, Product)

- Anderson’s Model of Learning Evaluation

- The Johari Window (Luft and Ingham)

- Kaufman’s Five Levels of Evaluation

- Holton’s Evaluation Model

- SOAP-M (Self, Other, Achievements, Potential, Meta-analysis)

- IBTEM (Impact Based Training Evaluation Model)

- Sales Training Evaluation Model (STEM)

What is the difference between training evaluation methods and training evaluation models?

Training evaluation methods and training evaluation models are closely related concepts, but they serve different purposes and operate at different levels of abstraction.

While training evaluation models provide a structured framework for assessing training programs, training evaluation methods are the specific techniques used within that framework to gather and analyze data.

Popular training evaluation methods include feedback, attendance, behavior metrics, and more, as Statista research shows.

Both are essential for a robust and meaningful evaluation of training effectiveness.

WalkMe Team

WalkMe pioneered the Digital Adoption Platform (DAP) for organizations to utilize the full potential of their digital assets. Using artificial intelligence, machine learning, and contextual guidance, WalkMe adds a dynamic user interface layer to raise the digital literacy of all users.